Top Strategies for Detecting Bots Ingame in 2025

16 Jul 2025 • 10 min read

Bot detection in gaming has become crucial as modern bots threaten the integrity of online gaming environments. As detection accuracy improves and more bot accounts are banned, the battle against automated players keeps evolving. These automated programs represent one of the greatest threats to Massively Multiplayer Online Games today. For perspective, World of Warcraft alone generated an estimated $150 million in monthly subscription fees at its peak, which shows how much revenue effective bot detection protects.

Modern bots have become increasingly sophisticated, using human-like mouse movements, CAPTCHA solving capabilities, and artificial intelligence to bypass traditional bot detection systems. Additionally, these programs can make hundreds or even thousands of requests per minute, significantly impacting the game economy and player experience.

Understanding Bot Behavior in Online Games

Online gaming environments face an escalating challenge from sophisticated bot programs that mimic human players. A thorough understanding of bot behavior forms the foundation for effective detection and prevention strategies.

Types of bots in MMOGs and FPS games

Gaming bots generally fall into several distinct categories based on their sophistication and purpose. In MMORPGs, "smart" bots precisely imitate human movements, can auto-aim, shoot at tracked targets, switch between targets, and even employ evasive tactics when threatened. In contrast, "silly" bots perform more basic functions like staying at bases and shooting at enemies within range, or following predetermined movement patterns. The simplest form, known as "clickers," merely controls keyboard and mouse functions to maintain presence in games without active participation.

First-person shooter bots have their own evolutionary history. Early examples like Quake's Reaper Bot navigated maps by following human player movements while shooting with near-perfect accuracy. Over time, FPS bots became more sophisticated, learning to follow invisible waypoints or chase after items to create more convincing gameplay patterns.

Common bot objectives: score vs. asset farming

Bot operators pursue varied objectives depending on the game type. In competitive games, bots might aim for score advantages, whereas in MMORPGs, they typically focus on resource acquisition. Indeed, research shows that game bots spend 60% of their time harvesting more than 5,000 items daily—a volume practically impossible for human players to achieve.

The economic motivation behind botting is substantial. According to a 2021 report, the broader bot market costs businesses up to $250 million annually, with gaming representing a significant sector. Bot operations include real money trading (RMT), power leveling services, and gaining competitive advantages. Furthermore, bot detection analysis reveals that the cumulative ratio of "earning experience points," "obtaining items," and "earning game money" for bots approaches 0.5, compared to just 0.33 for human players—clearly demonstrating their profit-focused nature.
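To make the ratio above concrete, here is a minimal sketch of how such a share could be computed from an action log. The action labels and the function name `profit_action_ratio` are hypothetical; the source only names the three categories, not a log format.

```python
from collections import Counter

# Hypothetical labels for the three profit-oriented categories named above.
PROFIT_ACTIONS = {"earn_xp", "obtain_item", "earn_money"}

def profit_action_ratio(actions):
    """Return the share of logged actions that fall into the profit categories."""
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts[a] for a in PROFIT_ACTIONS) / total

# Illustrative log: 3 of 6 actions are profit-oriented.
sample_log = ["earn_xp", "chat", "obtain_item", "move", "earn_money", "trade"]
print(profit_action_ratio(sample_log))
```

An account whose ratio sits near 0.5 over a long window would only be a candidate for review, not proof of botting, since dedicated human grinders can look similar.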

Why Bot Detection Is Critical In-Game

Player Experience Impact

Malicious bots degrade the user experience by introducing unfair advantages and disrupting gameplay. Players encounter threats such as automated opponents that win unfairly, causing frustration and loss of trust. Malicious bots also slow down game servers, leading to lag and poor user experience. During peak times, malicious bots can account for over 90% of login traffic, overwhelming systems and increasing user dropout rates. User experience suffers as bots exploit vulnerabilities, steal rewards, and erode the sense of achievement. Community-driven detection efforts, combined with AI, have improved user experience by reducing false positives and restoring trust in game integrity.

Economic Risks

Bots create significant economic risks for game operators and players. Malicious bots engage in in-game currency theft, scams, and money laundering, threatening the financial stability of gaming ecosystems. Security measures must address these threats to prevent revenue loss and protect valuable assets. For example, Blizzard’s ban of over 100,000 World of Warcraft bot accounts in 2015 caused major fluctuations in in-game commodity prices, demonstrating the economic impact of bots. Malicious bots inflate markets with farmed resources, devaluing player achievements and undermining the game’s economy. The broader bot market costs businesses up to $250 million annually, driven by real money trading and unfair competition. Security teams must use multi-layered defenses, including CAPTCHA and device fingerprinting, to counter these sophisticated threats and safeguard both user experience and economic value.

Threats to Game Integrity

Bots present persistent threats to the integrity of online games. Developers face complex cyber threats as malicious bots evolve, using advanced techniques to bypass traditional defenses. These threats undermine fair competition and disrupt the balance intended by game designers. Security threats from bots include code manipulation, unauthorized access, and exploitation of vulnerabilities. Malicious bots can reverse engineer game logic, inject code, and manipulate APIs, making robust security essential.

Bot Detection Methods and Technologies Ingame

Game developers in 2025 rely on advanced methods for detecting a bot ingame. The most effective strategies combine behavioral biometrics, machine learning analysis, and anomaly detection. These approaches deliver high-accuracy detection while minimizing disruption to genuine users. Clean data remains essential for reliable results.

CAPTCHA for Suspicious Behavior Triggers

When suspicious activity is detected, CAPTCHA challenges provide an immediate verification layer. Traditional text-based CAPTCHAs have become less effective as advanced bots can solve them with up to 99.8% accuracy. Instead, modern games implement more sophisticated variants like GeeTest Adaptive CAPTCHA, which analyzes user behavior with frictionless interaction. For existing customers exhibiting unusual patterns, implementing two-factor authentication offers an additional security layer.
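A trigger like this can be sketched as a small decision function. The thresholds, the `risk_score` input, and the function name are assumptions for illustration; they do not describe how GeeTest or any specific game scores sessions.

```python
def choose_challenge(risk_score, is_existing_customer):
    """Map a risk score in [0, 1] to a verification step.

    Thresholds (0.3 and 0.7) are illustrative and would need tuning.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < 0.3:
        return "none"          # low risk: do not interrupt the player
    if risk_score < 0.7:
        return "captcha"       # suspicious: ask for a CAPTCHA
    # High risk: step up to two-factor for known accounts, CAPTCHA otherwise.
    return "two_factor" if is_existing_customer else "captcha"

print(choose_challenge(0.85, is_existing_customer=True))
```

Keeping the low-risk branch free of any challenge is the point of the design: most genuine players never see a prompt.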

IP Reputation and Device Fingerprinting Integration

Device fingerprinting collects distinctive attributes from users' hardware, software, and network configurations to create a unique identifier. This technique helps detect suspicious activities by identifying when multiple accounts are accessed from the same device within a short timeframe. IP reputation services flag addresses associated with previous bot activities, enabling developers to implement appropriate countermeasures against recognized threats.
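The "many accounts on one device in a short timeframe" check can be sketched with a sliding window. The tuple layout, the 10-minute window, and the limit of three accounts are assumptions chosen for the example.

```python
from collections import defaultdict

def flag_shared_devices(logins, window_seconds=600, max_accounts=3):
    """Flag device ids that log in more than max_accounts distinct accounts
    within any window of window_seconds.

    logins: iterable of (timestamp_seconds, device_id, account_id).
    """
    by_device = defaultdict(list)
    for ts, device, account in logins:
        by_device[device].append((ts, account))

    flagged = set()
    for device, events in by_device.items():
        events.sort()
        start = 0
        for end in range(len(events)):
            # Shrink the window until it spans at most window_seconds.
            while events[end][0] - events[start][0] > window_seconds:
                start += 1
            accounts = {acct for _, acct in events[start:end + 1]}
            if len(accounts) > max_accounts:
                flagged.add(device)
                break
    return flagged
```

Shared devices such as internet cafe machines can trip a rule like this, so in practice it would feed a risk score instead of triggering a ban on its own.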

Behavioral Biometrics

Behavioral biometrics focuses on analyzing how users interact with the game environment. This method leverages unique patterns in user behavior to distinguish between bots and humans. Behavioral analysis provides a robust layer of security, as bots struggle to mimic the subtle nuances of human actions.

Movement and Input Patterns

Detecting a bot ingame often starts with monitoring movement and input patterns. Human players display natural variability in their actions, such as inconsistent mouse movements, irregular keystrokes, and spontaneous decision-making. Bots, on the other hand, tend to follow repetitive, predictable paths and exhibit uniform timing in their inputs.

- Behavioral analysis tools track these patterns in real time.

- AI models flag suspiciously consistent or mechanical behaviors.

- Developers use this data to trigger further investigation or automated responses.
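One simple way to quantify "uniform timing" is the coefficient of variation of the gaps between inputs. This is a minimal sketch; the 0.05 threshold and the function names are assumptions, and a production system would combine many such features.

```python
from statistics import mean, pstdev

def timing_cv(timestamps):
    """Coefficient of variation of the gaps between consecutive input timestamps.

    Returns None when there are too few inputs to judge.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        return None
    avg = mean(gaps)
    if avg <= 0:
        return None
    return pstdev(gaps) / avg

def looks_mechanical(timestamps, cv_threshold=0.05):
    """True when input timing is suspiciously regular (illustrative threshold)."""
    cv = timing_cv(timestamps)
    return cv is not None and cv < cv_threshold

# Perfectly even 0.5 s clicks versus irregular human-like timing.
print(looks_mechanical([0.0, 0.5, 1.0, 1.5, 2.0]))
print(looks_mechanical([0.0, 0.31, 1.2, 1.45, 2.9]))
```

Bots that add random jitter will pass this single check, which is why timing is usually only one input among several.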

A recent industry survey found that 59% of gamers regularly encounter unauthorized AI bots in online games. 71% report that bots damage multiplayer competition, and 18% have abandoned games due to bot interference. These statistics highlight the urgent need for precise behavioral detection.

Social Interaction Analysis

Bots often fail to replicate authentic social interactions. Behavioral analysis extends to chat patterns, group participation, and in-game communication. Human players engage in dynamic conversations, use slang, and respond contextually. Bots typically generate generic or contextually irrelevant messages.

- Detection systems analyze chat logs for unnatural language or repetitive phrases.

- AI-driven behavioral analysis identifies accounts with limited or scripted social engagement.

- Some platforms now offer human-only gameplay modes, using digital identity verification to ensure authenticity.
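The chat-log check for repetitive phrases can be approximated with a repetition ratio. This is a rough sketch under the assumption that messages arrive as plain strings; real systems would also look at context and language quality.

```python
from collections import Counter

def repetition_ratio(messages):
    """Fraction of messages that repeat an earlier message.

    Messages are compared after lowercasing and collapsing whitespace.
    """
    normalized = [" ".join(m.lower().split()) for m in messages if m.strip()]
    if not normalized:
        return 0.0
    counts = Counter(normalized)
    repeats = sum(c - 1 for c in counts.values())
    return repeats / len(normalized)

chat = ["Cheap gold!", "cheap  GOLD!", "Cheap gold!", "hi"]
print(repetition_ratio(chat))  # 2 repeats out of 4 messages -> 0.5
```

A high ratio is typical of spam-style bots, while an account with no chat at all needs a different signal, such as the limited social engagement mentioned above.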

A partnership between leading game studios and digital identity providers has enhanced bot detection and player authentication. This collaboration enables more secure and enjoyable user experiences.

Machine Learning Analysis

Machine learning has transformed the landscape of detecting a bot ingame. AI models process vast amounts of behavioral data, learning to recognize subtle differences between bots and legitimate users. These systems adapt over time, improving detection accuracy as new threats emerge.

Machine learning analysis enables real-time detection of bots by continuously updating models with new data. This approach reduces false positives and ensures that genuine users experience minimal disruption. Behavioral analysis powered by AI allows for scalable, automated monitoring across millions of accounts.

Anomaly Detection

Anomaly detection identifies unusual patterns in user behavior that may indicate bot activity. AI-driven anomaly detection methods outperform traditional rule-based systems by processing large volumes of data in real time. These models adapt to evolving threats, learning from new patterns and reducing false positives.
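As a baseline for comparison, a statistical outlier test is the simplest form of anomaly detection. The sketch below uses the modified z-score based on the median absolute deviation; it is a stand-in for illustration, not the AI-driven models described here, and the 3.5 cutoff is a common convention, not a value from the source.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds threshold.

    Uses the median absolute deviation (MAD), which is less distorted by
    extreme values than mean and standard deviation.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Hypothetical items harvested per hour by seven accounts.
items_per_hour = [40, 42, 38, 45, 41, 39, 400]
print(mad_outliers(items_per_hour))  # [6]
```

A fixed statistical rule like this is easy to explain to players who appeal a ban, which is one reason it often survives alongside learned models.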

AI models such as Neural Networks, Random Forest, and Gradient Boosting combine multiple detection techniques for robust results. Studies show that Neural Networks outperform other algorithms in detecting anomalies and bot attacks, especially in complex environments like IoT networks. This adaptability ensures that detection systems remain effective as bots become more sophisticated.

Detecting a bot ingame now depends on a multi-layered approach. Behavioral biometrics, machine learning analysis, and anomaly detection work together to deliver precise, real-time results. These methods protect user experience, maintain game integrity, and ensure fair competition for all players.

How to Detect and Block Bots in Games

Game developers in 2025 rely on advanced anti-bot solutions to maintain fair play and robust security. These solutions use cutting-edge technologies to detect and block malicious bots before they can disrupt gameplay or compromise sensitive data. The integration of AI and machine learning has transformed the landscape, making detection more accurate and adaptive.

Pattern Recognition

Pattern recognition stands at the core of modern anti-bot solutions. Security teams analyze vast datasets to identify subtle differences between human and automated behavior. For example, bots often repeat the same actions with precise timing, while human players show natural variation. Anti-bot solutions use pattern recognition to flag suspicious activity, such as repetitive login attempts or identical movement paths. Device fingerprinting enhances this process by creating unique profiles for each device, making it harder for bots to evade detection. CAPTCHA systems add another layer, challenging suspicious users with tasks that only humans can solve. Together, these tools form a powerful defense against evolving threats.

Security experts recommend combining pattern recognition with real-time monitoring for the best results. This approach allows anti-bot solutions to adapt quickly as new bot tactics emerge.
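Spotting identical movement paths across accounts can be sketched by quantizing each path to a grid and grouping exact matches. The input layout, the grid size, and the function name are assumptions for this example.

```python
from collections import defaultdict

def group_identical_paths(paths_by_account, grid=1.0):
    """Group accounts whose movement paths match after snapping to a grid.

    paths_by_account: {account_id: [(x, y), ...]}.
    Returns a list of account groups (each sorted) with more than one member.
    """
    groups = defaultdict(list)
    for account, path in paths_by_account.items():
        # Snap every waypoint to the nearest grid cell so tiny jitter is ignored.
        key = tuple((round(x / grid), round(y / grid)) for x, y in path)
        groups[key].append(account)
    return [sorted(accts) for accts in groups.values() if len(accts) > 1]

paths = {
    "a": [(0.1, 0.2), (5.0, 5.1)],
    "b": [(0.2, 0.1), (5.1, 4.9)],
    "c": [(3.0, 3.0), (9.0, 9.0)],
}
print(group_identical_paths(paths))  # [['a', 'b']]
```

Exact matching on a grid is deliberately strict; popular quest routes would need a similarity measure and a longer path before two accounts count as suspicious.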

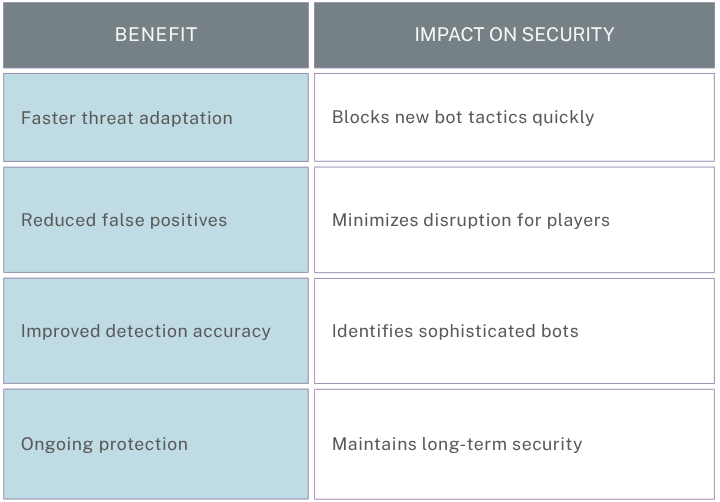

Continuous Model Training

Continuous model training ensures that anti-bot solutions stay ahead of attackers. Security teams feed new data into machine learning models, allowing them to learn from recent threats and adapt their detection methods. This process reduces false positives and improves accuracy over time. Anti-bot solutions that use continuous training can recognize new bot behaviors that traditional systems might miss. For example, the GeeTest Bot Management Platform updates its models regularly, providing real-time protection against the latest threats. This proactive approach keeps gaming environments secure and fair for all players.
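The idea of feeding new labeled data into a model as it arrives can be illustrated with a tiny online logistic regression. This is a generic sketch, not a description of how the GeeTest platform trains its models; the class name, the learning rate, and the single hypothetical feature are assumptions.

```python
import math

class OnlineLogisticModel:
    """Logistic regression updated one labeled example at a time (SGD)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        """Estimated probability that the account is a bot."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, label):
        """One gradient step on a new example (label 1 = bot, 0 = human)."""
        error = self.predict_proba(features) - label
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error

# Hypothetical feature: 1.0 if input timing was flagged as mechanical, else 0.0.
model = OnlineLogisticModel(n_features=1)
for _ in range(200):
    model.update([1.0], 1)  # confirmed bot
    model.update([0.0], 0)  # confirmed human
print(model.predict_proba([1.0]) > model.predict_proba([0.0]))
```

Because each update uses only the newest example, the model can keep learning from fresh ban reviews without retraining from scratch.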

The table below highlights the benefits of continuous model training:

Anti-Tamper Technologies

Anti-tamper technologies play a critical role in modern anti-bot solutions. These tools protect game code and assets from unauthorized modification. Security teams deploy anti-tamper measures to detect and block attempts to alter game files or inject malicious scripts. For example, runtime application self-protection (RASP) monitors the game environment for suspicious changes. Device fingerprinting and server-side validation work together to ensure that only legitimate clients can access sensitive features. Anti-tamper technologies also support CAPTCHA integration, making it even harder for bots to bypass security checks.
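One building block of server-side validation is comparing file digests against a trusted manifest. The sketch below assumes the server already holds the expected SHA-256 digests and receives file contents to check; the function names and data layout are hypothetical, and real anti-tamper products do much more than this.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()

def find_tampered(reported_files, trusted_manifest):
    """Return sorted paths that are missing or whose digest differs.

    reported_files: {path: file contents as bytes}.
    trusted_manifest: {path: expected SHA-256 hex digest}.
    """
    tampered = []
    for path, expected in trusted_manifest.items():
        data = reported_files.get(path)
        # compare_digest avoids leaking match position through timing.
        if data is None or not hmac.compare_digest(sha256_hex(data), expected):
            tampered.append(path)
    return sorted(tampered)

manifest = {"game.bin": sha256_hex(b"original build")}
print(find_tampered({"game.bin": b"patched build"}, manifest))  # ['game.bin']
```

A check like this only helps when the hashing happens somewhere the attacker cannot control, since a modified client can simply report the digests the server expects.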

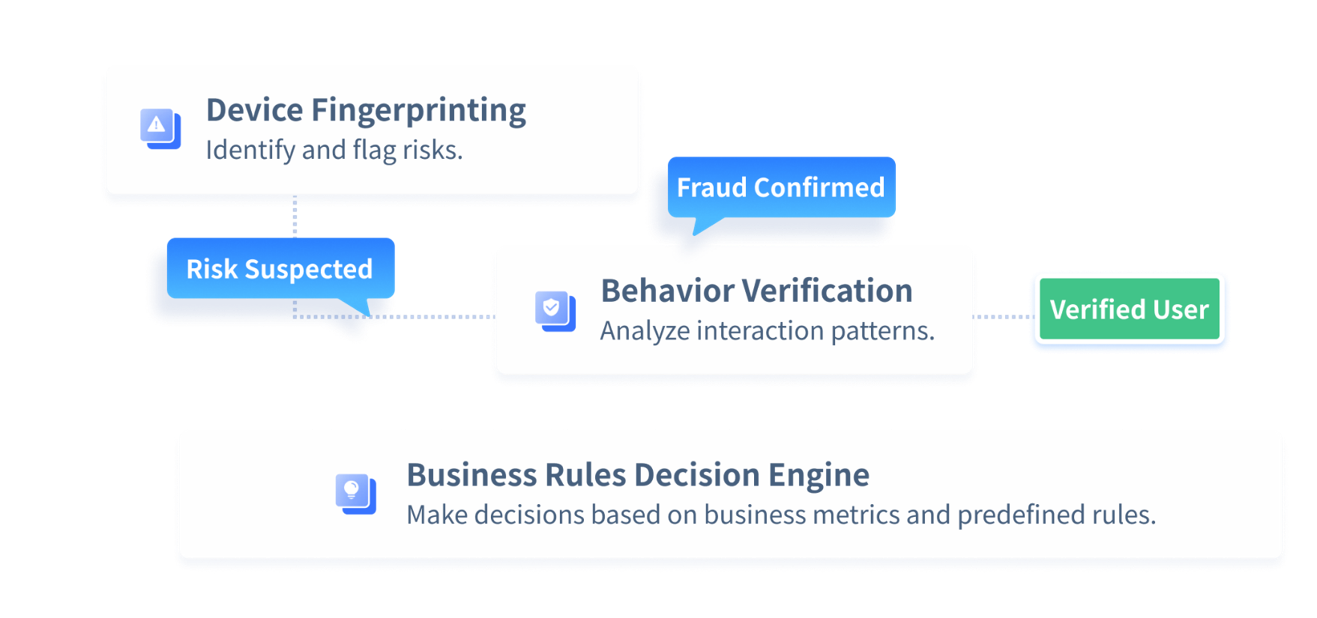

GeeTest Bot Management Platform: AI-Powered Anti-Bot Solutions

Leading anti-bot solutions like GeeTest Bot Management Platform combine behavioral analytics, device fingerprinting, and CAPTCHA to deliver comprehensive protection. This multi-layered approach ensures that games remain secure, fair, and enjoyable for all users.

- Smart defense against bot attacks with Behavior Verification: GeeTest's newest adaptive CAPTCHA combats sophisticated bots with dynamic security and offers a customizable design for a seamless experience for enterprises and end users.

- Accurate device ID & risk flagging with Device Fingerprinting: Revolutionize device recognition with advanced algorithms, set new standards for tracking accuracy, and utilize detailed risk dimensions for a holistic view of traffic patterns.

- Agile decisions with Business Rules Decision Engine: Combined with the existing risk management capabilities and business rules, build a flexible, comprehensive, and automated risk control system.

Whether you're starting from scratch, upgrading your current systems, or developing a comprehensive risk management solution, GeeTest can tailor its services to meet the needs of different types of games and developers.

Case Study

Background

Axie Infinity is an NFT-based online video game developed by Sky Mavis and launched in May 2018. This turn-based role-playing game, with its AXS token, is the most popular and best-known Play-and-Earn (P&E) blockchain gaming project in the world, attracting more than 1 million monthly active players.

Balancing Security and UX

With the rapid rise of Play & Earn games and the evolving Web3 landscape, bot attacks have become an inevitable challenge. Ensuring a secure yet seamless gaming economy is a top priority for the Axie Infinity security team. They require a solution that balances security and user experience while remaining compatible with various player devices.

Solution

- AI-driven behavior analysis to detect fake accounts and fraud

- Innovative verification methods to minimize user friction

- GeeTest’s cross-platform expertise ensures broad device compatibility

For more detailed case information, please refer to the articles.

Conclusion

Game developers in 2025 achieve the best results by combining multi-layered defenses, AI-driven detection, and behavioral analytics. Solutions like CAPTCHA and device fingerprinting remain essential for blocking automated threats. The GeeTest Bot Management Platform stands out by integrating these technologies with adaptive learning. Teams benefit from:

- Machine learning and behavioral biometrics that adapt to new bot tactics

- Continuous monitoring and regular updates to address emerging vulnerabilities

- Training and collaboration that foster a proactive, security-focused culture

A commitment to innovation and community engagement ensures fair, secure gaming environments for all players. Sign up for a free trial or contact us today, and see the difference GeeTest can make.

FAQ

What are the most common bot threats in online games?

Bot threats include automated cheating, resource farming, account takeovers, and market manipulation. These activities disrupt fair play and damage player trust. Game operators face increased security risks and economic losses from unchecked bot activity.

Why is CAPTCHA still effective for bot detection in 2025?

CAPTCHA challenges remain effective because they test for human traits bots struggle to mimic. Advanced systems like GeeTest CAPTCHA adapt to new attack patterns, making it difficult for automated scripts to bypass security checks.

How does device fingerprinting help block bots?

Device fingerprinting collects unique hardware and software details from each user. Security teams use this data to identify suspicious devices and block repeated bot attempts. This method works silently, providing seamless protection for genuine players.

What role does behavioral analytics play in bot detection?

Behavioral analytics monitors user actions, such as movement and input patterns. AI models analyze these behaviors to spot inconsistencies typical of bots. This approach increases detection accuracy and reduces false positives.

Which bot management solutions stand out in 2025?

GeeTest Bot Management Platform leads the industry. It combines behavioral analytics, device fingerprinting, and adaptive CAPTCHA. This multi-layered approach adapts to evolving threats and ensures robust protection for gaming environments.

Can bots bypass modern anti-bot solutions?

Some advanced bots use AI to mimic human behavior or spoof device fingerprints. However, multi-layered defenses that combine CAPTCHA, device fingerprinting, and behavioral analytics make successful evasion much harder.

How can developers reduce false positives in bot detection?

Developers use AI-driven models and continuous data validation to distinguish between bots and real users. Regular updates and layered detection methods help minimize false positives, ensuring a smooth experience for legitimate players.

GeeTest
