AI Agents: The Cybersecurity Frontier and New Wave of Threats

11 Jun 2025 • 10 min read

AI is advancing, but so are the threats.

As artificial intelligence (AI) advances at an unprecedented pace, we’re entering an era where AI agents are rapidly becoming part of the digital fabric. Whether used in e-commerce, customer service, or content generation, these agents offer real-world efficiency gains.

However, this same evolution presents new challenges. Malicious actors are now deploying AI agents to automate cyberattacks, scrape sensitive data, and bypass traditional security mechanisms. The risks are tangible:

- According to Imperva’s 2024 Bad Bot Report, malicious bots accounted for 32% of all internet traffic in 2023, a rise the report attributes in part to the growing popularity of AI and Large Language Models (LLMs).

- Gartner predicts that by 2027, AI agents will reduce the time it takes to exploit account exposures by 50%.

- Netacea reports that enterprises lose an average of $85.6 million annually due to automated bot-based threats.

This blog explores what AI agents are, how they work, the threats they pose, and most importantly, how businesses can build defenses to combat them.

What Is an AI Agent?

An AI agent is an autonomous or semi-autonomous software entity that perceives its environment, processes input, makes decisions, and takes actions to achieve specific objectives. These agents can operate reactively (responding to events) or proactively (planning future actions). Key distinguishing characteristics include:

- Autonomy: Operates independently, with minimal or no human intervention.

- Tool Augmentation: Integrates external APIs, databases, or tools (e.g., Selenium, SQL engines, web crawlers) to extend capabilities.

- Memory: Maintains short-term or long-term context, such as storing and retrieving relevant information (e.g., vector database of threat patterns).

- Planning: Capable of multi-step task decomposition and execution via iterative reasoning-action loops (e.g., ReAct paradigm).

- Reactivity: Responds to real-time changes in the environment or user input.

- Proactivity: Initiates actions independently to fulfill objectives or optimize outcomes.

- Sociality: Coordinates or collaborates with other agents or human users in a shared task environment.

The fundamental distinction between AI agents and traditional chatbots lies in capability and autonomy: While chatbots are typically limited to answering static queries within pre-defined boundaries, AI agents can dynamically plan and execute complex task chains, such as generating analytical reports, orchestrating multi-step workflows, or integrating cross-platform data—with minimal supervision.

Classifying AI Agents: Types and Characteristics

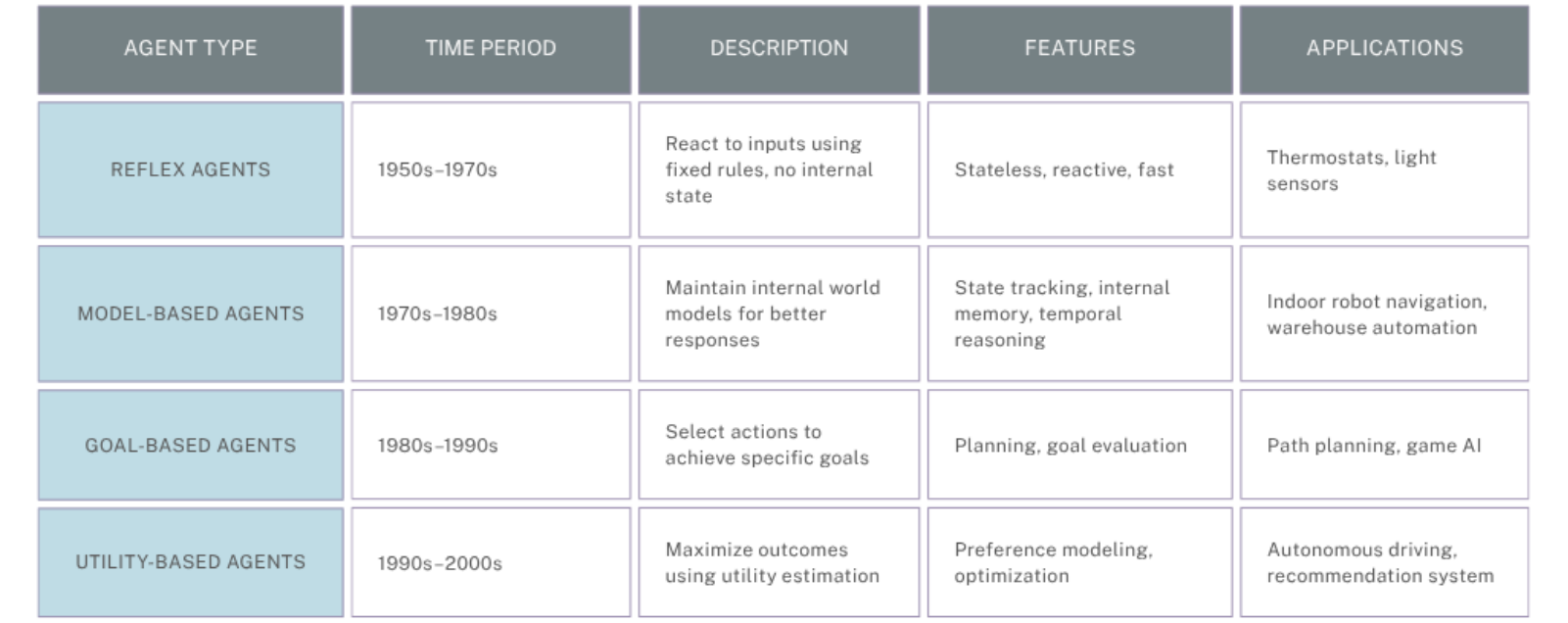

The foundational classification of AI agents—reflex-based, model-based, goal-based, and utility-based—was introduced in Artificial Intelligence: A Modern Approach by Russell and Norvig. These categories describe how agents make decisions based on perception, internal state, goals, and utility.

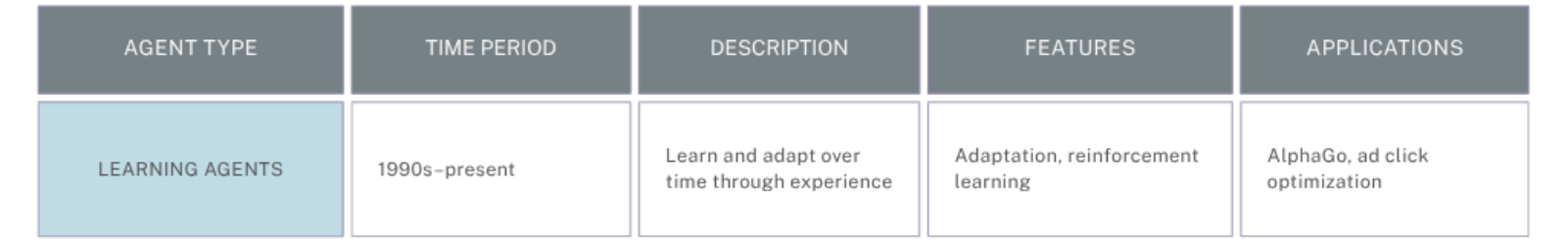

Learning, while not a standalone type, is a vital capability that can enhance any of the above agents, allowing them to improve over time through feedback and experience.

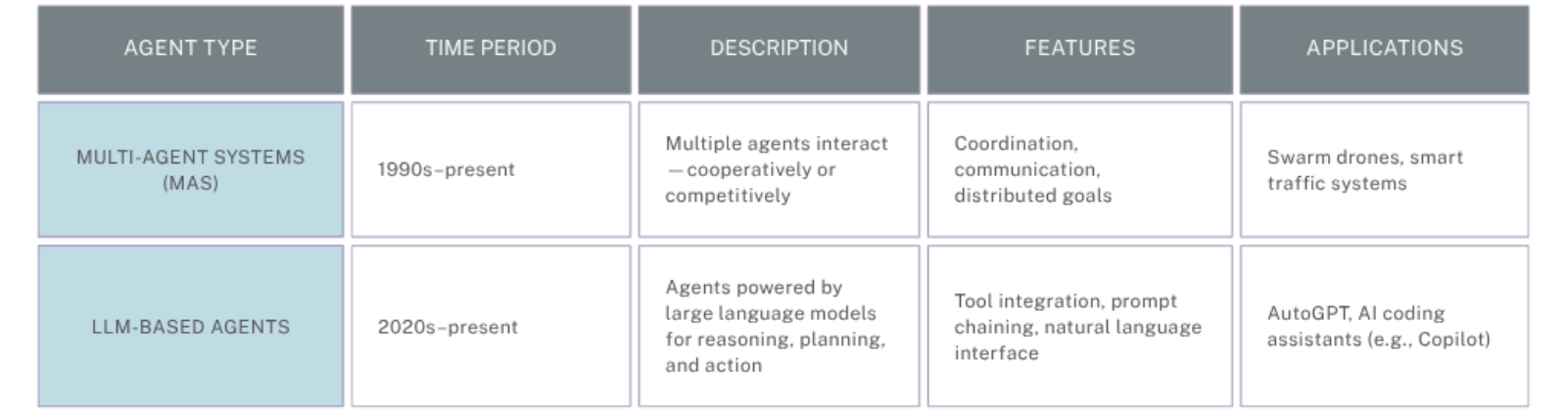

As AI systems evolve, new architectural paradigms have emerged. Multi-Agent Systems enable distributed intelligence through coordination and negotiation, while LLM-Based Agents harness large language models to reason, plan, and act using natural language interfaces. These are not new types, but rather new ways to implement and scale intelligent behavior.

The evolution of AI agents reflects a broader progression—from hardcoded rules to adaptive, collaborative, and language-driven autonomy.

Core Agent Types

Cross-Cutting Capability

Architectural & Implementation Trends

How AI Agents Work

System Architecture of AI Agents

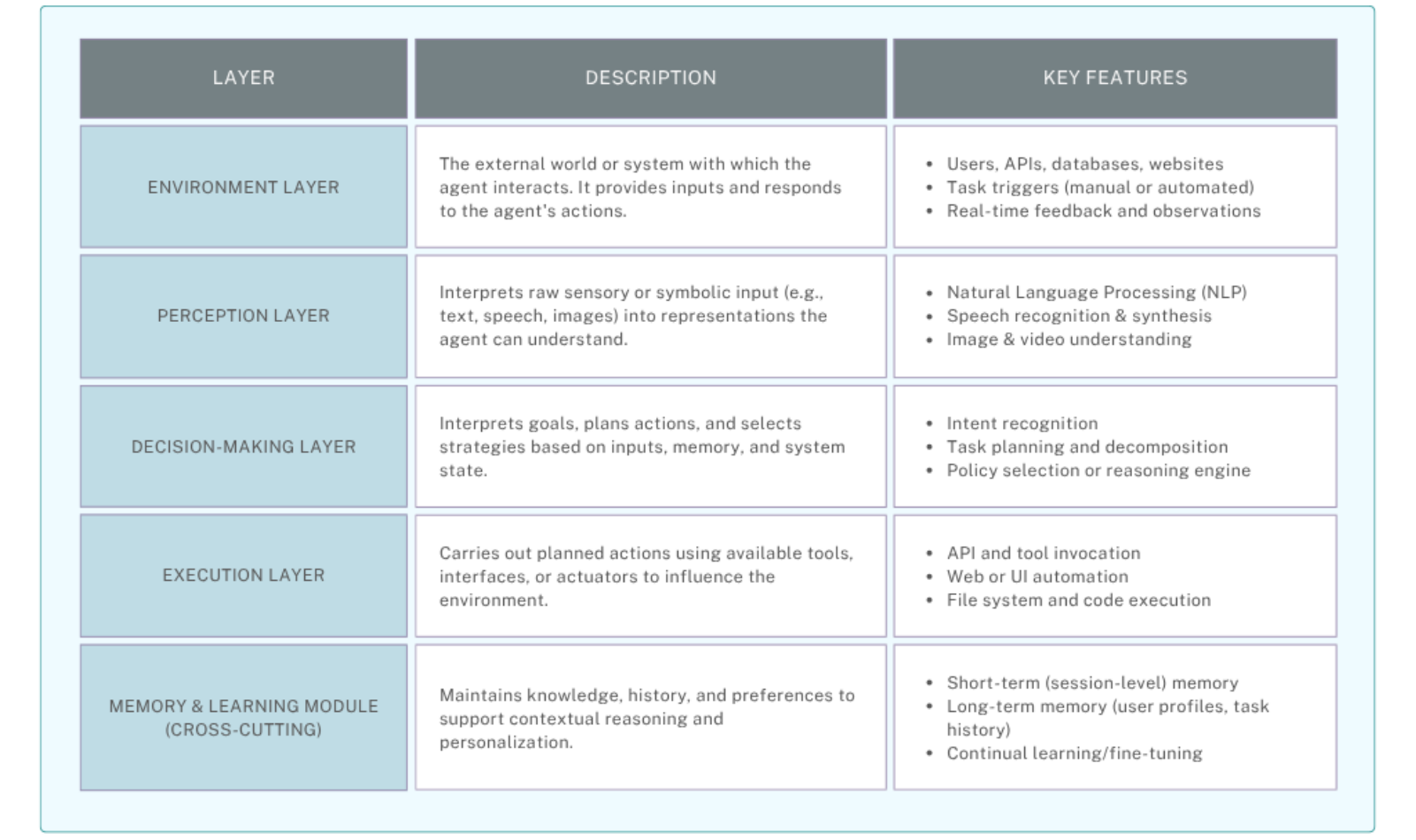

Building a robust AI agent requires a layered system architecture. Like a human, the agent senses, thinks, acts, and learns.

Core Components of AI Agents

A well-designed AI agent is composed of modular components, each responsible for a specific capability:

AI Agent Workflow Cycle

Step-by-Step Execution Example:

- Trigger: User request: "Schedule client meeting in Paris next Tuesday"

- Perception: Intent recognition (scheduling + location awareness)

- Planning:

  - Check Paris timezone

  - Verify attendees' availability

  - Book conference room

- Tool Orchestration: execute_tools([CalendarAPI(), TimezoneFinder(), RoomReserver()])

- Memory Operation: Store "Client prefers video conferences"

- Output: "Teams meeting booked for 10:00 CET. Calendar invites sent."

- Learning: If meeting declined → log feedback → nightly retraining updates scheduling preferences
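
The cycle above can be sketched as a small perceive, plan, act, and remember loop. This is a minimal illustration, not a production agent framework: the `Agent` class, its methods, and the stub tools are hypothetical names introduced here, and the keyword matching stands in for the model-based intent recognition a real agent would use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Agent:
    """Toy agent: perceive -> plan -> act -> remember (all names hypothetical)."""

    tools: Dict[str, Callable[[dict], str]]
    memory: List[str] = field(default_factory=list)

    def perceive(self, request: str) -> dict:
        # Stand-in for intent recognition; a real agent would call a model here.
        text = request.lower()
        location: Optional[str] = "Paris" if "paris" in text else None
        intent = "schedule" if "schedule" in text else "unknown"
        return {"intent": intent, "location": location}

    def plan(self, intent: dict) -> List[str]:
        # Decompose the goal into an ordered list of tool names.
        if intent["intent"] == "schedule":
            return ["timezone", "availability", "room"]
        return []

    def act(self, steps: List[str], intent: dict) -> List[str]:
        # Run each planned tool in order and collect its observation.
        return [self.tools[name](intent) for name in steps]

    def run(self, request: str) -> List[str]:
        intent = self.perceive(request)
        observations = self.act(self.plan(intent), intent)
        # Memory operation: keep a short record of what was done.
        self.memory.append(f"{request} -> {len(observations)} steps")
        return observations


# Stub tools standing in for the calendar, timezone, and room services.
tools = {
    "timezone": lambda i: f"timezone checked for {i['location']}",
    "availability": lambda i: "attendees available",
    "room": lambda i: "room booked",
}

agent = Agent(tools=tools)
result = agent.run("Schedule client meeting in Paris next Tuesday")
print(result)
```

The learning step is left out of the sketch; in practice it would consume the entries accumulated in `memory` or an external feedback log.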

Security Threats Posed by AI Agents

As autonomous AI agents gain capabilities in reasoning, goal completion, and real-time interaction with digital systems, they introduce both opportunity and risk. When deployed maliciously—or simply uncontrolled—these agents can compromise digital infrastructure, exfiltrate data, and cause compliance violations.

Unauthorized Web Scraping of Proprietary or User Data

Threat: LLM-Augmented Web Scraping

Mechanism: AI agents utilize advanced parsing techniques (e.g., DOM tree analysis, semantic segmentation), large language models, and stealth crawling methods (e.g., rotating user agents, dynamic rendering) to extract structured and unstructured data at scale.

Risks & Impact:

- Unauthorized use of copyrighted or proprietary content

- Violation of terms of service and API agreements

- Fuel for training commercial LLMs without compensation

Real Case: In June 2025, Reddit filed a lawsuit against AI startup Anthropic, accusing it of illegally scraping Reddit content to train its Claude chatbot, violating the platform’s user agreement and robots.txt file.

AI-Generated Phishing and Social Engineering

Threat: Generative Phishing Automation

Mechanism: AI agents generate highly personalized phishing messages using publicly available personal and professional data (e.g., from LinkedIn), enabling psychological profiling and message crafting that mimics human tone and style.

Risks & Impact:

- Higher click-through rates, credential theft, and malware installation

- Bypassing traditional spam/phishing filters due to natural-language variance

Real Case: In March 2023, cybersecurity firm Darktrace reported that AI-generated phishing emails had significantly increased in sophistication, making them more convincing and harder to detect.

Infrastructure Strain and Denial of Service via Agent Crawling

Threat: AI Crawler-Induced Denial of Service (C-DoS)

Mechanism: AI agents with recursive or plugin-enhanced capabilities often crawl websites aggressively to extract data. When left unchecked, such activity causes abnormal spikes in traffic and server load, even when those sites are not intended for public API use.

Risks & Impact:

- Web servers experience increased latency or full outages

- Cloud and bandwidth costs surge for maintainers

- Smaller open-source projects suffer disproportionately from unsanctioned traffic

Real-World Case:

In 2025, developer Xe Iaso reported persistent crawling and resource drain on a self-hosted Git server caused by AI crawlers. In response, Iaso released an open-source tool named Anubis, which applies proof-of-work challenges to make web crawling computationally expensive for autonomous agents.

AI-Driven Exploit Chains and Autonomous Attacks

Threat: Autonomous Exploitation via LLM Agents

Mechanism: Large language model (LLM)-driven AI agents (such as Auto-GPT or ReaperAI) can autonomously chain together complex tasks—like reconnaissance, vulnerability scanning, and payload delivery—without direct human intervention. These agents leverage reasoning loops and memory buffers to dynamically adjust their behavior.

Risks & Impact:

- Democratization of cyberattacks through low-code/no-code agents

- Use of zero-day vulnerabilities for automated breaches

- Emergence of deceptive agent behavior when under restriction or shutdown

Real-World Case:

In 2024, cybersecurity researchers demonstrated that GPT-4-powered agents successfully identified and exploited zero-day vulnerabilities in over 50% of tested web systems in a controlled environment, without human guidance.

Policy Circumvention and Terms of Service Violations

Threat: Compliance-Evasive Agent Behavior

Mechanism: AI agents simulate human browsing behavior, ignore robots.txt, employ headless browsers, spoof IP addresses, and execute JavaScript, thereby circumventing access restrictions and anti-bot protections.

Risks & Impact:

- Bypass of geo-blocking, age gates, rate limits

- Legal and reputational exposure for platforms

Real Case: In May 2022, the UK's Information Commissioner's Office fined Clearview AI £7.5 million for collecting images of people in the UK from the web and social media to create a global online database for facial recognition without consent.

How to Mitigate AI Agent Threats

While AI agents present novel and scalable threats, the cybersecurity community has developed mitigation strategies that range from traditional protocols to emerging counter-AI mechanisms. No single defense is comprehensive, however, so effective protection against AI agents usually requires a layered approach that balances accessibility, compliance, and resilience.

Robots.txt and Access Control Headers

The robots.txt file is a de facto standard that instructs compliant crawlers which parts of a site should not be indexed or accessed. Similarly, X-Robots-Tag and robots meta directives can be used to control indexing behavior at the HTTP header or HTML level.

Effectiveness:

- Highly effective against ethical or commercial agents (e.g., Googlebot, Bingbot)

Limitations:

- No technical enforcement—compliance is voluntary, often ignored by malicious or unauthorized AI crawlers

- Does not stop scraping via headless browsers or LLM-augmented agents
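
To make the "compliance is voluntary" point concrete, the sketch below shows the check a well-behaved crawler performs before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content, the bot name `ExampleAIBot`, and the URLs are made-up examples; nothing forces a crawler to run this check at all.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the bot name and paths are examples only.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the parsed robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("ExampleAIBot", "https://example.com/blog/post"))    # False
print(is_allowed("SomeBrowser", "https://example.com/blog/post"))     # True
print(is_allowed("SomeBrowser", "https://example.com/private/data"))  # False
```

A crawler that sends a different user-agent string, or never consults the file, is unaffected by these rules, which is why robots.txt works only as a signal to cooperative agents.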

CAPTCHAs and Human Verification Systems

CAPTCHAs (Completely Automated Public Turing tests to tell Computers and Humans Apart) differentiate humans from bots by requiring perceptual or cognitive responses. Variants include text/image puzzles, invisible reCAPTCHA, and behavioral analysis.

Effectiveness:

- Still effective against basic crawlers and script-based bots

- Can slow down AI agents not optimized for visual interaction

Limitations:

- AI vision models (e.g., GPT-4V, Gemini) are increasingly capable of solving CAPTCHAs

- Traditional CAPTCHA has poor accessibility and UX for legitimate users

Anti-Bot Services

Cloud-based security providers like Cloudflare, Akamai, or GeeTest detect and block suspicious traffic using behavioral heuristics, device fingerprinting, and rate-limiting.

Effectiveness:

- Excellent for detecting non-human browsing patterns and blocking LLM-based crawlers

- Offers real-time anomaly detection and bot mitigation

Limitations:

- May inadvertently block legitimate users (false positives)

- High-performance AI agents that simulate user behavior can evade detection
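
As a rough illustration of one behavioral heuristic, the sketch below flags sessions whose requests arrive at suspiciously regular intervals. It is a simplified assumption of how such a signal might look, not how Cloudflare, Akamai, or GeeTest actually score traffic; the function name `looks_automated` and both thresholds are hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence


def looks_automated(
    timestamps: Sequence[float],
    min_requests: int = 5,
    cv_threshold: float = 0.1,
) -> bool:
    """Flag a session whose request spacing is nearly constant.

    timestamps: request times in seconds, in ascending order.
    cv_threshold: coefficient of variation below which spacing counts as
    machine-like (an illustrative value, not a tuned one).
    """
    if len(timestamps) < min_requests:
        return False  # too little evidence to judge
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    average_gap = mean(gaps)
    if average_gap <= 0:
        return True  # all requests at the same instant: treat as a burst
    return pstdev(gaps) / average_gap < cv_threshold


scripted = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
human = [0.0, 2.1, 9.4, 10.2, 31.0, 33.5]
print(looks_automated(scripted), looks_automated(human))
```

An agent that adds random delays defeats this single signal, which is why real services combine many such features with device fingerprinting.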

Proof-of-Work (PoW) Mechanisms

Inspired by blockchain systems, PoW mechanisms force clients to solve a computational puzzle before accessing server resources. The artificial cost is negligible for a single visitor but adds up quickly for mass automated crawlers.

Effectiveness:

- Highly effective at increasing the cost of mass scraping or autonomous reconnaissance

- Can selectively throttle agents that fail to solve tasks in real time

Limitations:

- Adds latency even for legitimate users

- Resource-intensive for clients, particularly on low-powered devices, while the server must also issue and verify challenges

LLM-Specific Threat Detection Tools (Emerging)

Emerging tools detect LLM-generated traffic by analyzing semantic patterns (e.g., repetitive phrasing) or cryptographic watermarks embedded in AI outputs.

Effectiveness:

- Tools like Glaze (initially developed for protecting visual artists) are being adapted to watermark or disrupt AI crawlers at content level

Limitations:

- Largely experimental and not widely adopted

- May be outpaced by evolving agent models

Honeypots and Decoy Endpoints

Honeypots are fake content or endpoints inserted into webpages that are invisible to human users but detectable to bots. When accessed, they trigger alerts or automatically blacklist the suspicious clients.

Effectiveness:

- Useful for fingerprinting unknown agents and detecting stealth crawlers

- Can generate IP reputational data for blacklists

Limitations:

- Easy to avoid once the honeypot pattern is known

- Offers detection but not prevention
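
The detection side of a honeypot can be very small. The sketch below scans request records for hits on decoy paths and returns the offending client addresses; the decoy paths, the `(ip, path)` record shape, and the function name are assumptions made for illustration.

```python
from typing import FrozenSet, Iterable, Set, Tuple

# Hypothetical decoy paths that are never linked visibly for human visitors.
DECOY_PATHS: FrozenSet[str] = frozenset({"/internal/export-all", "/admin-backup.zip"})


def flag_honeypot_hits(
    requests: Iterable[Tuple[str, str]],
    decoys: FrozenSet[str] = DECOY_PATHS,
) -> Set[str]:
    """Return the client IPs that requested any decoy path.

    requests: (client_ip, path) pairs, for example parsed from an access log.
    """
    return {ip for ip, path in requests if path in decoys}


access_log = [
    ("203.0.113.5", "/products"),
    ("203.0.113.9", "/internal/export-all"),
    ("203.0.113.5", "/cart"),
    ("203.0.113.9", "/products"),
]
print(flag_honeypot_hits(access_log))
```

The flagged addresses could then feed a blocklist or a stricter challenge tier; the sketch only detects and does not block anything itself.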

API Rate Limiting and Behavioral Throttling

Rate limiting sets thresholds for how frequently a single IP or token can make requests within a defined window. Behavioral throttling also considers mouse movement, typing speed, and navigation depth.

Effectiveness:

- Reduces likelihood of mass automated querying

- Encourages use of formal API channels over scraping

Limitations:

- Easily bypassed with proxy pools or distributed AI agents

- May degrade the experience for power users
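
A sliding-window counter is one simple way to enforce a per-client threshold. The sketch below is an in-memory illustration under assumed limits; the class name `SlidingWindowLimiter` and its parameters are hypothetical, and a real deployment would usually keep the counters in a shared store.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        """Record the request and return True if it is within the limit."""
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
decisions = [limiter.allow("token-a", t) for t in (0.0, 1.0, 2.0, 3.0)]
print(decisions)
```

Because the limiter keys on `client_id`, an attacker who spreads requests across many IPs or tokens stays under every individual threshold, which is the proxy-pool limitation noted above.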

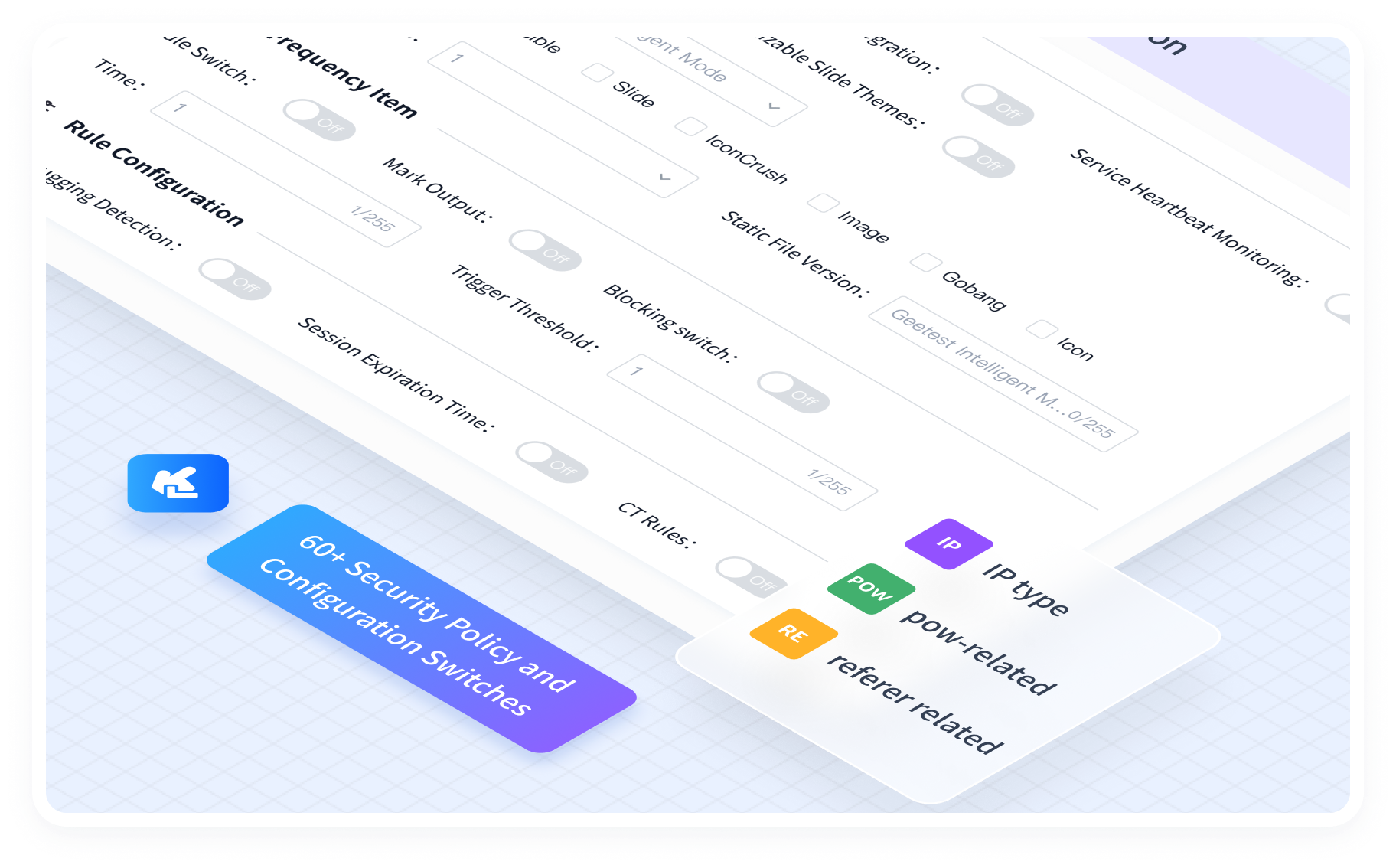

GeeTest's Defense Suite Against AI Agents

While AI agents introduce unprecedented threats, GeeTest leverages cutting-edge AI and adaptive security frameworks to stay ahead of malicious automation. We have developed a robust and adaptive defense suite that not only uses AI technology to detect and mitigate AI-powered threats, but also harnesses it internally to improve agility and responsiveness in the face of evolving cyberattacks.

AI-Driven Security Matrix

CAPTCHA used to be a common and cost-effective tool against crawlers, but traditional CAPTCHAs crumble against LLM-vision models. GeeTest's 4th-generation adaptive CAPTCHA operates as an AI vs. AI battlefield:

GeeTest AI Technology Matrix: Uses AI-driven algorithm models to detect abnormal patterns and feed insights into the machine-learning engine, ensuring accurate identification of malicious traffic.

7 Layer Adaptive Protection: More than a CAPTCHA, this is a complete solution. It implements a dynamic, multi-layered defense approach designed to counter sophisticated, AI agent-powered threats by dramatically increasing their cost and complexity.

Customized, Targeted Security Strategy: Over 60 configurable security strategies, combined with PoW challenges, honeypots, and more, allow organizations to tailor defenses based on risk level, threat type, or attack vector—including API abuse, emulator-based automation, and IP spoofing.

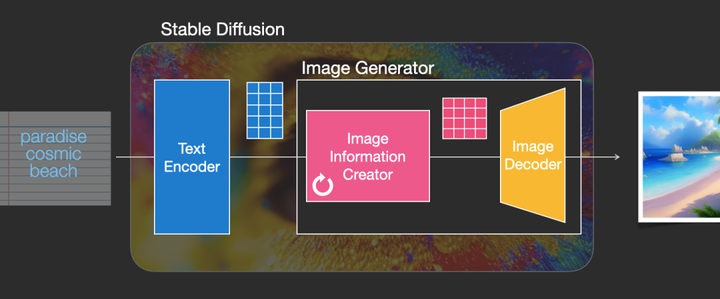

AIGC-Powered Image Generation and Defense

We leverage AIGC to dynamically refresh verification images, blocking dataset-based AI attacks and limiting the effectiveness of visual recognition models.

Automatic Validation Updates: We developed an automatic update system that refreshes up to 300,000 verification images hourly. Visual deviation and other processing make recognition hard for bots.

Integrating Stable Diffusion with CAPTCHA Generation: Adapting the Stable Diffusion (SD) model for CAPTCHA generation has significantly bolstered the security of these systems. SD's advanced latent diffusion techniques enable the production of complex verification images, overcoming the common vulnerabilities and inefficiencies of traditional CAPTCHAs.

Read more:

CAPTCHA Harvesting Alert: How to Break It

Decoding Image Model-based Cracking

Real-World Use Case

Key Takeaways

The primary objective of attackers is to maximize profit as efficiently as possible, operating at a scale far beyond that of legitimate users. AI agents naturally become their primary tool because they significantly enhance attack efficiency. With AI-powered tools, attackers can rapidly identify and bypass CAPTCHAs, enabling large-scale exploitation for financial gain.

- Monthly vs. Hourly Updates: Most CAPTCHA providers update their image datasets only once a month, typically after a breach has occurred, which leaves them vulnerable to repeated attacks. In contrast, GeeTest enables tailored update strategies based on threat scenarios. From task creation to global deployment, GeeTest can complete an image update within minutes, which makes hourly image dataset refreshes possible and greatly increases the operational cost for attackers.

- 10,000 vs. 300,000 Images: Typical providers release no more than 10,000 new images per update. With AI-powered tools, attackers can quickly decode them and build answer databases for repeated exploitation. GeeTest leverages AIGC technology to generate up to 300,000 images per hour, with dynamic updates occurring every second. This renders pre-built answer libraries ineffective: attackers waste resources without guaranteed returns until they give up.

- Measurable Defense Impact: GeeTest’s real-time image refresh capabilities make it easier to monitor data variations and spot behavioral anomalies in the backend, helping to track evolving attack patterns, deploy effective countermeasures, and ensure the effectiveness of protection measures.

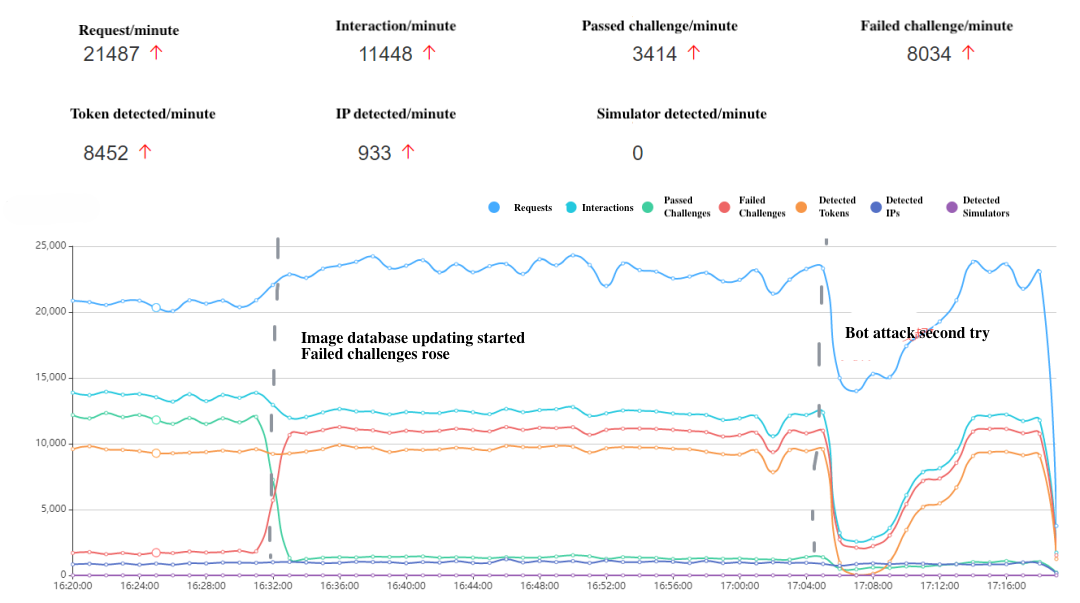

Case Overview

Company A, a representative e-commerce platform, faced persistent malicious SMS abuse during user login processes. To mitigate this, the security team deployed CAPTCHA ahead of a major sales event to block automated bot traffic. For the first two weeks, operations continued as usual, and CAPTCHA worked well in fending off bots.

Challenge

One evening, an unexpected surge occurred in CAPTCHA Requests, Interactions, and Passed Challenges. Strangely, the CAPTCHA seemed completely useless. An overwhelming volume of SMS messages inundated the system, draining service resources. At its peak, SMS costs climbed to over $2,800 per hour.

The company contacted their CAPTCHA provider, who responded by tweaking some images and introducing slightly more complex challenges. This initially reduced the success rate of bot interactions, but attackers quickly adapted. By the tenth day of the attack, the volume remained abnormally high.

Solution

Company A turned to GeeTest for support, and our security team quickly identified the issue as a CAPTCHA harvesting operation.

- 16:31, GeeTest updated the image database to mitigate further loss as soon as possible. Consequently, the Failed Challenges spiked, indicating the attackers' image answer database had been rendered useless. The Requests remained high, indicating continued attack attempts.

- 17:06, attackers noticed the disruption and temporarily ceased their Requests.

- 17:10, a second attack attempt was launched, but it again failed to overcome the updated CAPTCHA, and the curves returned to their normal range.

GeeTest maintained hourly image database updates, eventually prompting attackers to abandon the campaign. Throughout the incident, Company A received no user complaints, confirming that legitimate users were unaffected.

Results

After GeeTest Adaptive CAPTCHA was deployed and the image database was updated, the curves for CAPTCHA Requests, Interactions, Passed Challenges, and Failed Challenges at Company A stabilized and returned to normal, allowing the company’s mid-year sales to proceed as planned.

Compared to the previous CAPTCHA provider, which updated its image database monthly, GeeTest’s hourly updates protected Company A from potential losses totaling tens of thousands of dollars.

Conclusion

AI agents are transforming both innovation and cyber threats. While they boost efficiency across industries, they also empower attackers with tools for automated exploitation, large-scale data scraping, and sophisticated phishing.

Traditional single-layer defenses can no longer keep up with these evolving risks. To stay secure, businesses must embrace adaptive, AI-driven security strategies (like GeeTest’s multi-layered protection) built specifically to counter AI-powered threats. In this new era of intelligent attacks, only equally intelligent and dynamic defenses can ensure resilience.

FAQ

Q1: What is an AI agent, and how is it different from traditional bots?

A: AI agents are autonomous systems capable of making decisions and executing tasks across multiple steps. Unlike traditional bots or chat assistants, they can plan, access tools, retrieve information, and adapt their strategies, making them far more capable and potentially more dangerous.

Q2: How are AI agents being used in cyberattacks?

A: Malicious actors are leveraging AI agents to automate phishing, data scraping, vulnerability scanning, CAPTCHA bypassing, and even denial-of-service attacks. These agents can continuously optimize their behavior, scale operations, and mimic human users to evade detection.

Q3: Are traditional defenses still effective against these threats?

A: Not on their own. Standard defenses such as static CAPTCHA, rate limiting, or user-agent filtering are often inadequate against AI agents, which can solve CAPTCHAs, adapt their behavior, and bypass common detection rules.

GeeTest
